To help blind drivers, no. To help AI, yes.

- 4 Posts

- 129 Comments

71·27 days ago

I think it’s more likely a compound sigmoid (don’t Google that). LLMs are composed of distinct technologies working together. As we’ve reached the inflection point of the scaling for one, we’ve pivoted implementations to get back on track. Notably, context windows are no longer an issue. But the most recent pivot came just this week, allowing for a huge jump in performance. There are more promising stepping stones coming into view. Is the exponential curve just a series of sigmoids stacked too close together? In any case, the article’s correct - just adding more compute to the same exact implementation hasn’t enabled scaling exponentially.
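To make the "stacked sigmoids" idea concrete, here is a minimal sketch, not a model of any real system. It assumes each technology pivot contributes one logistic curve, and the stage count, spacing, and doubling heights are made-up illustration values. Every single stage saturates, but their sum keeps climbing in a way that can pass for exponential growth until the last stage levels off.

```python
import math

def sigmoid(x, midpoint, height, steepness=1.0):
    """One logistic curve: slow start, rapid middle, plateau at `height`."""
    return height / (1.0 + math.exp(-steepness * (x - midpoint)))

def compound_sigmoid(x, n_stages=5, spacing=4.0, growth=2.0):
    """Sum of logistic curves, one per hypothetical 'pivot'.

    Stage k is centred at k * spacing and plateaus at growth ** k.
    All three parameters are illustrative assumptions.
    """
    return sum(
        sigmoid(x, midpoint=k * spacing, height=growth ** k)
        for k in range(n_stages)
    )

if __name__ == "__main__":
    # Print the compound curve at evenly spaced points.
    for x in range(0, 25, 4):
        print(x, round(compound_sigmoid(x), 2))
```

Once the pivots stop, the curve flattens at the sum of the stage heights, which is the sigmoid half of the argument: the growth looks exponential only while new stages keep arriving.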

2·1 month ago

There used to be very real hardware reasons that upload had much lower bandwidth. I have no idea if there still are.

1·1 month ago

Yeah, but they encourage confining it to a virtual machine with limited access.

5·1 month ago

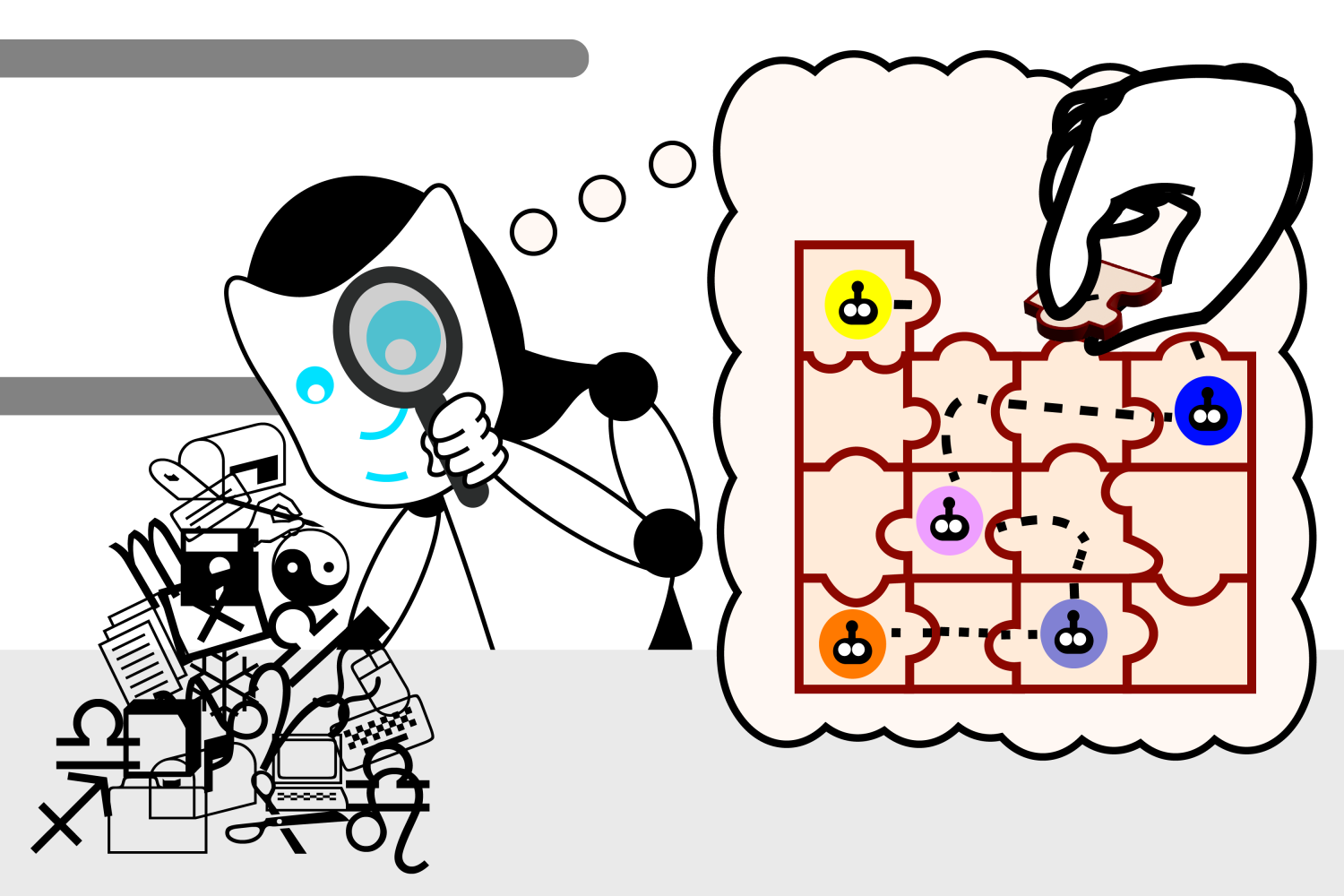

5·1 month agoLogic and Path-finding?

389·2 months ago

Shithole country.

Yeah, using image recognition on a screenshot of the desktop and directing a mouse around the screen with coordinates is definitely an intermediate implementation. Open Interpreter, Shell-GPT, LLM-Shell, and DemandGen make a little more sense to me for anything that can currently be done from a CLI, but I’ve never actually tested em.

I was watching users test this out and am generally impressed. At one point, Claude tried to open Firefox, but it was not responding. So it killed the process from the console and restarted. A small thing, but not something I would have expected it to overcome this early. It’s clearly not ready for prime time (by their repeated warnings), but I’m happy to see these capabilities finally making it to a foundation model’s API. It’ll be interesting to see how much remains of GUIs (or high level programming languages for that matter) if/when AI can reliably translate common language to hardware behavior.

Can I blame 9/11 on Trump or something?

Aren’t they in Macy’s now? Wait, is Macy’s still a thing?

The next generation?

In its latest audit of 10 leading chatbots, compiled in September, NewsGuard found that AI will repeat misinformation 18% of the time.

70% of the instances where AI repeated falsehoods were in response to bad actor prompts, as opposed to leading prompts or innocent user prompts.

28·2 months ago

To be clear, it’ll be 10-30 years before AI displaces all human jobs.

112·3 months ago

an eight-year-old girl was among those killed

32·3 months ago

Calling what attention transformers do memorization is wildly inaccurate.

*Unless we’re talking about semantic memory.

49·3 months ago

It honestly blows my mind that people look at a neural network that’s even capable of recreating short works it was trained on without having access to that text during generation… and choose to focus on IP law.

21·3 months ago

The issue is that next to the transformed output, the untransformed input is being used in a commercial product.

Are you only talking about the word repetition glitch?

23·3 months ago

How do you imagine those works are used?

It’s probably a vision model (like this) with custom instructions that direct it to focus on those factors. It’d be interesting to see the instructions.